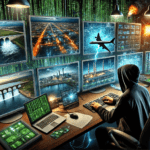

As tensions between Iran and Israel erupt into open conflict, the world is witnessing not only a military confrontation but also a collision of narratives, weaponized through artificial intelligence. In this new era of warfare, truth has become one of the first casualties. AI-generated images, deepfake videos, and synthetic news are circulating at unprecedented speed, blurring the line between real and fake in ways that shape both local perceptions and international responses. Yet this phenomenon is not unique to the Middle East. From Russia’s invasion of Ukraine to Chinese influence operations targeting Taiwan and Western elections, the strategic misuse of AI to control the information battlefield has become a global trend, and the Iran–Israel conflict is only its latest stage. This article explores how AI is reshaping war not just as a tool but as a terrain, and what that means for truth, diplomacy, and global security.

When State and Non-State Actors Weaponize AI Images

The manipulation of images in this conflict is neither isolated nor one-sided. Both Iranian and Israeli state media have circulated doctored visuals to influence domestic and international audiences, often presenting AI-manipulated scenes as undeniable evidence. Meanwhile, research indicates that Iran-backed campaigns on TikTok use generative AI to frame narratives, heightening public confusion at a time when government-imposed internet blackouts limit access to independent information. The result is a curated reality in which government control of information and algorithmic amplification reinforce each other, further obscuring objective truth.

The Strategic Consequences of AI-Augmented Misinformation

The rise of AI-augmented misinformation in the Iran–Israel conflict carries deep strategic implications. One of the most immediate effects is the erosion of public trust. When both governments and non-state actors flood the information ecosystem with conflicting AI-generated imagery and fabricated narratives, it becomes increasingly difficult for global audiences—and even the citizens of those nations—to determine what is real. This ambiguity weakens public pressure for accountability and de-escalation, as uncertainty replaces clarity.

Alongside this is the risk of policy paralysis. Misleading content that falsely attributes civilian casualties or military actions can distort international perception, influencing foreign policy decisions based on misinformation. Diplomatic channels become congested not only by strategic posturing, but by the fear that any intervention might be grounded in an inaccurate portrayal of events. The potential for miscalculation rises dramatically when real-time decision-making is contaminated by false data.

Compounding these effects is the growing overlap between information warfare and cyber operations. The digital theater is no longer just about perception—it has become a battleground in its own right. Both Iranian and Israeli cyber operations have intensified, with each side deploying bot networks, coordinated propaganda campaigns, and AI-generated content to shape domestic and international narratives. Iranian campaigns have focused on amplifying anti-Israel sentiment through manipulated media, while Israeli operations have reportedly included targeted influence efforts aimed at undermining Iranian public morale and promoting narratives of deterrence. These actions illustrate how AI-powered propaganda is not only a tool of psychological warfare, but also a means of destabilizing strategic equilibrium across borders.

Looking at the Bigger Picture: A Pattern of AI Abuses

These developments fit a troubling global pattern. In Gaza, Israel has reportedly used AI for military targeting, relying on AI-based target-generation systems known as “Gospel” and “Lavender,” which has sparked concerns about civilian harm and a lack of accountability.

In the broader landscape of geopolitical information warfare, Western democracies have increasingly become targets of AI-enhanced disinformation campaigns orchestrated by state-linked actors in Russia, Iran, and China. These campaigns frequently deploy networks of bots and fake personas to spread manipulated content across platforms including Facebook, X, TikTok, and Telegram, especially during politically sensitive periods such as elections, protests, or international crises. In the United States, operations linked to Russia’s Internet Research Agency used fake personas and meme pages to exacerbate racial and political divisions during the 2016 election, and by 2020 an IRA-linked network had begun using AI-generated profile photos to make its fictitious personas harder to trace. Similarly, during protests in France between 2019 and 2021, Iran-linked accounts reportedly circulated AI-modified videos that exaggerated or fabricated scenes of French police brutality, seeking to delegitimize Western democratic norms. China’s “Spamouflage” network has also turned to AI, flooding social media with fake videos and articles intended to discredit Western criticism of Beijing’s actions in Xinjiang and Taiwan.

What makes these operations more potent today is not merely the volume of content, but its emotional precision and its mimicry of real public discourse. AI enables the rapid generation of text, images, and even video that appears convincingly human, making such content harder to detect and easier to amplify. Similar tactics appeared in coordinated campaigns during the COVID-19 pandemic, when Iranian and Chinese sources promoted vaccine misinformation aimed at Western governments, often by impersonating Western doctors or journalists. Likewise, around the time of Russia’s 2022 invasion of Ukraine, fake videos purportedly showing Ukrainian soldiers committing war crimes circulated widely, some reportedly produced with deepfake tools and spread through bot accounts on Telegram and VKontakte. The cumulative effect of these campaigns is not only to mislead but to exhaust public trust in the very idea of truth, creating what some analysts call a “perception battlefield,” where reality becomes a matter of alignment rather than fact.

This pattern of AI-powered disinformation across conflict zones and political systems suggests that the Iran–Israel case is part of a much larger transformation in the nature of war and propaganda. From Kyiv to California, Taipei to Tehran, the use of synthetic media and algorithmically driven influence campaigns has become a defining feature of 21st-century power projection. It is not simply a matter of propaganda volume—it is the strategic weaponization of believability, made scalable by AI.

Broader Implications and Policy Challenges

The spread of AI-generated misinformation in the Iran–Israel conflict reflects a broader and deepening crisis in the global information order. As computational propaganda becomes more sophisticated, regulators and digital platforms are under increasing pressure to adapt. Current content moderation systems are often reactive and ill-equipped to detect AI-generated forgeries in real time. To counteract this, governments and technology companies must prioritize tools that can flag synthetic media and make the origins of content transparent.

At the same time, the infrastructure for verification must be urgently strengthened. Established fact-checking teams, such as those at Deutsche Welle and Agence France-Presse, play a vital role, but their efforts must scale up dramatically. By integrating AI-assisted detection into journalistic workflows, these teams can identify recycled, manipulated, or entirely fabricated content more quickly and reliably, before it spreads unchecked across social platforms.

At the international level, the growing prevalence of AI-powered disinformation underscores the need for new legal and ethical frameworks. Much like arms control agreements in previous eras, global norms must now emerge to regulate the use of artificial intelligence in warfare and propaganda. These frameworks must establish accountability not only for the creation of false content but also for its strategic deployment—whether by state actors, proxy groups, or decentralized networks.

Final Thought

The Iran–Israel conflict illustrates how AI has turned the fog of war into a “fog of truth.” In this era, images and narratives are not merely collateral; they are active battlegrounds. Unless international alliances, tech platforms, and governments collaborate on verification, transparency, and accountability, AI’s power to manufacture reality will remain a strategic weapon, lethal to both civilians and democracy.